What is Kubernetes? A Beginner’s Guide to Kubernetes on VPS

Sharma bal

Table of contents

- 1. What is Kubernetes and Why Do We Need It?

- 2. Why Run Kubernetes on a VPS?

- 3. Use Cases for Kubernetes on VPS

- 4. Key Kubernetes Concepts Explained

- 5. Deploying a Simple Application on Kubernetes on VPS

- Conclusion

1. What is Kubernetes and Why Do We Need It?

Imagine deploying a web application. A few years ago, it might have run on a single server. Today, it is often a collection of interconnected services, each running in its own container. Managing just a handful of these containers can quickly become a complex task. What happens when you scale to dozens, hundreds, or even thousands? That’s where Kubernetes on VPS comes in.

Kubernetes, often abbreviated as K8s, is an open-source platform designed to automate the deployment, scaling, and management of containerized applications. With many containers running different parts of your application, starting, stopping, scaling, and monitoring each one by hand would be an operational nightmare. Kubernetes simplifies this process by providing a centralized system to orchestrate these containers.

Here are some key problems Kubernetes solves:

- Complex Deployment: Manually deploying and managing numerous containers is error-prone and time-consuming. Kubernetes automates this process, ensuring consistent deployments and reducing manual effort.

- Scaling Challenges: As application demand fluctuates, you must scale your containers up or down accordingly. Kubernetes automates scaling based on resource utilization or other metrics, ensuring optimal performance and resource efficiency.

- Service Discovery and Load Balancing: Containers are ephemeral and can be created and destroyed dynamically. Kubernetes provides service discovery mechanisms that allow containers to find and communicate with each other, even as their IP addresses change. It also distributes traffic across multiple container instances to prevent overload.

- Self-Healing: If a container fails, Kubernetes automatically restarts it, ensuring high availability and minimizing downtime. This self-healing capability is crucial for maintaining application stability.

- Resource Management: Kubernetes optimizes resource allocation across your container cluster, ensuring each container has the necessary resources while preventing any single container from monopolizing resources.
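To make the resource management idea more concrete, here is a toy sketch of request-based placement in Python. It is not how the Kubernetes scheduler is implemented (the real scheduler filters and scores nodes using many more signals); the function name `place_pods`, the node names, and the CPU and memory figures are all made up for illustration.

```python
from typing import Optional


def place_pods(
    requests: dict[str, tuple[int, int]],
    capacity: dict[str, tuple[int, int]],
) -> dict[str, Optional[str]]:
    """First-fit placement: put each pod on the first node with enough free
    CPU (millicores) and memory (MiB). Pods that fit nowhere map to None."""
    free = {node: list(cap) for node, cap in capacity.items()}
    placement: dict[str, Optional[str]] = {}
    for pod, (cpu, mem) in requests.items():
        placement[pod] = None
        for node, remaining in free.items():
            if remaining[0] >= cpu and remaining[1] >= mem:
                # Reserve the requested resources on this node.
                remaining[0] -= cpu
                remaining[1] -= mem
                placement[pod] = node
                break
    return placement


if __name__ == "__main__":
    # Hypothetical two-node cluster: 2 CPU cores and 4 GiB of RAM per VPS.
    nodes = {"vps-1": (2000, 4096), "vps-2": (2000, 4096)}
    pods = {"web": (1500, 1024), "api": (1000, 2048), "db": (1000, 2048)}
    for pod, node in place_pods(pods, nodes).items():
        print(f"{pod} -> {node}")
```

The point of the sketch is that each container declares what it needs, and placement only succeeds where that request fits, which is what keeps one workload from crowding out another.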

Essentially, Kubernetes acts as a container orchestrator, managing the operational complexities of running containerized applications at scale. This allows developers to focus on writing code and delivering value.

1.1 Kubernetes vs. Docker: Understanding the Difference

It’s common to hear Kubernetes and Docker mentioned together, which can sometimes lead to confusion. While both are related to containerization, they serve different purposes.

Docker is a containerization runtime. It’s the tool for building, packaging, and running individual containers. Think of Docker as the engine that creates and runs the container. It packages an application and all its dependencies into a self-contained unit that can run consistently across different environments.

Kubernetes, on the other hand, is a container orchestration platform. It manages multiple containers across a cluster of machines. Think of Kubernetes as an orchestra conductor, coordinating the different instruments (containers) to play harmoniously.

Imagine you have a fleet of trucks (containers) that need to deliver packages (applications). Docker builds and runs the individual trucks, each capable of carrying its package. Kubernetes is like the logistics manager coordinating the entire fleet, deciding which trucks go where, optimizing routes, and ensuring timely delivery.

In practice, Docker and Kubernetes work together. You use Docker to create the container images and then use Kubernetes to deploy and manage those images in a cluster. Kubernetes doesn’t replace Docker; it builds upon it, providing the infrastructure for running and managing containers at scale.

This distinction is crucial: Docker focuses on managing the lifecycle of individual containers, while Kubernetes manages the lifecycle of multiple containers across a distributed system.

2. Why Run Kubernetes on a VPS?

2.1 What is Kubernetes on VPS?

Simply put, Kubernetes on a VPS means deploying and managing your Kubernetes clusters on virtual private servers. Instead of relying on a managed Kubernetes service from a major cloud provider or setting up a complex on-premises infrastructure with physical hardware (bare metal), you utilize the virtualized environment of a VPS.

A VPS provides a slice of a physical server, offering dedicated resources (CPU, RAM, storage) and a high degree of control over the operating system. This makes it suitable for running Kubernetes, which requires a stable and predictable environment. By installing Kubernetes components (like kubeadm, kubelet, and kubectl) directly onto your VPS instances, you create your own self-managed Kubernetes cluster.

2.2 Cost-Effectiveness of Kubernetes on VPS

One of the most compelling reasons for choosing Kubernetes on a VPS is its cost-effectiveness, especially when compared to other options:

- Compared to Major Cloud Providers: Managed Kubernetes services from cloud providers like AWS (EKS), Google Cloud (GKE), or Azure (AKS) offer convenience, but they often come with a significant price tag. You typically pay for the control plane (the Kubernetes master nodes), worker nodes, and other managed services. With a VPS, you only pay for the VPS instances themselves, giving you more control over your spending. You avoid the premium pricing associated with fully managed services.

- Compared to Bare Metal: Setting up a Kubernetes cluster on bare metal (your own physical servers) involves substantial upfront investment in hardware, data center space, power, cooling, and IT staff. A VPS eliminates these capital expenditures and reduces operational overhead. You rent the hardware and infrastructure, making it a more accessible entry point for most organizations, particularly smaller teams and startups.

Kubernetes on a VPS strikes a balance between cost and control. You get the benefits of container orchestration without the high costs of managed services or the complexities of managing physical hardware. It is an excellent option for those who want to experiment with Kubernetes, run smaller-scale deployments, or have specific budget constraints.

2.3 Control and Flexibility with Kubernetes VPS

Beyond cost, running Kubernetes on a VPS offers a high degree of control and flexibility:

- Operating System and Customization: You have complete control over the operating system running on your VPS, allowing you to choose a Linux distribution compatible with your applications and customize the environment to meet your specific needs. This level of customization is often limited in managed Kubernetes services.

- Networking and Security Configuration: You have more granular control over network configuration, including firewalls, routing, and load balancing. This is essential for implementing custom security policies and optimizing network performance.

- Tooling and Integrations: You can install any tools and software you need on your VPS, allowing seamless integration with your existing development and deployment workflows. This flexibility can be crucial for integrating specific CI/CD pipelines or monitoring systems.

- Vendor Lock-in Avoidance: By managing your Kubernetes cluster on a VPS, you avoid vendor lock-in associated with specific cloud providers. You can easily migrate your cluster to a different VPS provider if needed.

This level of control and flexibility makes Kubernetes on a VPS an attractive option for developers and system administrators who want a high degree of customization and want to avoid the constraints of managed services.

2.4 Kubernetes VPS vs. Managed Kubernetes Services

Choosing between Kubernetes on a VPS and a managed Kubernetes service depends on your specific needs and priorities:

| Feature | Kubernetes on VPS | Managed Kubernetes Service |
|---|---|---|
| Cost | Lower cost, especially for smaller deployments. | Higher cost, especially for larger deployments. |
| Control | Full control over OS, networking, and configuration. | Less control; provider manages core infrastructure. |
| Management | Requires more manual management of the control plane. | Provider manages the control plane; less operational overhead. |
| Scalability | Scalability depends on the underlying VPS infrastructure. | Highly scalable; provider manages scaling of the control plane. |
| Complexity | Steeper learning curve; requires more technical expertise. | Easier to get started; less technical expertise required. |
| Maintenance | You are responsible for maintaining the Kubernetes cluster. | Provider handles upgrades and maintenance of the control plane. |

A VPS is an excellent option if you prioritize cost-effectiveness, control, and customization and have the technical expertise to manage a Kubernetes cluster. A managed service might be a better fit if you prioritize ease of use, scalability, and minimal operational overhead and are willing to pay a premium.

3. Use Cases for Kubernetes on VPS

Kubernetes on a VPS unlocks various possibilities for deploying and managing applications. Here are some key use cases:

3.1 Hosting Web Applications with Kubernetes on VPS

Imagine you’re running a popular blog or an online store. You’ve built a great website, but now you must ensure it can handle the traffic, especially when you get featured in an article or run a big promotion.

Simplified Deployments

- No More Manual Server Wrangling: Traditionally, deploying a web application involved manually configuring web servers (like Apache or Nginx) on each server and copying your application files. This is time-consuming and prone to errors, especially with multiple servers. With Kubernetes, you define your application’s desired state in a simple configuration file. Tell Kubernetes, “I want three copies of my website running,” and it will handle the rest. It ensures the correct number of instances (containers) are up and running, ready to serve your visitors.

Automatic Scaling

- Handle Traffic Spikes with Ease: One of the biggest challenges with web applications is handling traffic spikes. Imagine your blog post goes viral – suddenly, you have thousands of visitors trying to access your site. With Kubernetes, you don’t have to worry about manually adding more servers. Kubernetes can automatically scale your application based on traffic or resource usage. If traffic increases, more container instances are automatically spun up to handle the load. When the surge subsides, it scales back down, saving you resources and money. It’s like having an automatic traffic manager for your website.
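The scaling decision can be sketched with the ratio rule documented for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The Python below only illustrates that arithmetic. The function name and the minimum and maximum bounds are assumptions, and the real autoscaler also applies a tolerance and stabilization behavior that this sketch leaves out.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Replica count suggested by the ratio rule, clamped to [min, max]."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Traffic spike: 3 replicas averaging 90% CPU against a 50% target.
    print(desired_replicas(3, 90.0, 50.0))
    # Surge over: 6 replicas averaging 20% CPU against the same target.
    print(desired_replicas(6, 20.0, 50.0))
```

In the first call the load per replica is nearly double the target, so the rule asks for more replicas; in the second the replicas are mostly idle, so it asks for fewer.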

Seamless Updates

- Keep Your Application Fresh Without Downtime: Updating a live website can be stressful. You want to deploy new features and bug fixes without interrupting the user experience. Kubernetes makes this easy with rolling updates. Instead of taking your entire website offline to update, Kubernetes gradually replaces old versions of your application with new ones. This ensures there are always enough running instances to handle traffic, resulting in zero downtime for your users. And if something goes wrong with the update? Kubernetes makes it easy to quickly roll back to the previous version.
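A rolling update can be pictured as a loop that starts a new instance before retiring an old one. The simulation below is a simplified sketch, not Kubernetes code: it assumes a surge of one extra pod at a time, assumes no pod may be unavailable, and treats every new pod as ready immediately. The function name and version labels are hypothetical.

```python
from typing import Iterator


def rolling_update(
    replicas: int, old: str, new: str, max_surge: int = 1
) -> Iterator[list[str]]:
    """Yield the list of running pod versions after each rollout step."""
    pods = [old] * replicas
    yield list(pods)
    while old in pods:
        # Start up to max_surge new pods alongside the old ones.
        surge = min(max_surge, pods.count(old))
        pods.extend([new] * surge)
        yield list(pods)
        # With the new pods serving traffic, retire the same number of old pods.
        for _ in range(surge):
            pods.remove(old)
        yield list(pods)


if __name__ == "__main__":
    for step in rolling_update(3, "v1", "v2"):
        print(step)
```

At every step the number of running pods stays at or above the requested replica count, which is why users see no interruption under these assumptions.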

Built-in Load Balancing

- Distribute Traffic for Optimal Performance: With multiple instances of your application running, you need a way to distribute incoming traffic evenly. Kubernetes has built-in load balancing that automatically distributes traffic across all running containers. This prevents any container from becoming overloaded, ensuring optimal performance and high availability for your users.

By using Kubernetes on a Hostomize VPS for your web applications, you get automated deployments, effortless scaling, seamless updates, and built-in load balancing.

3.2 Microservices Architecture with Kubernetes VPS

Microservices architecture, where applications are composed of small, independent services, is a perfect match for Kubernetes:

- Simplified Management of Microservices: Kubernetes provides a centralized platform for managing all the different microservices in your application. It handles deployment, scaling, service discovery, and communication between these services.

- Isolation and Fault Tolerance: Each microservice runs in its own container, providing isolation and preventing a failure in one service from affecting others. Kubernetes’ self-healing capabilities ensure that if a microservice fails, it is automatically restarted.

- Efficient Resource Utilization: Kubernetes optimizes resource allocation across all microservices, ensuring that each service has the resources it needs without wasting resources.

- Independent Scaling: You can scale individual microservices independently based on their specific needs. This allows you to optimize resource utilization and improve overall application performance.

Running Kubernetes on a VPS provides a cost-effective and flexible platform for implementing and managing microservices architectures, enabling faster development cycles, improved scalability, and increased resilience.

3.3 Running Databases on Kubernetes on a VPS

Running databases on Kubernetes can be more complex than running stateless applications, but it offers several benefits:

- Simplified Management: Kubernetes can simplify the management of database deployments, including scaling, updates, and backups.

- High Availability: Kubernetes can support high availability for databases by running replicated instances and automatically failing over to a healthy instance if one fails.

- Resource Management: Kubernetes can optimize resource allocation for databases, ensuring they have the necessary CPU, memory, and storage resources.

However, there are some complexities to consider:

- Persistent Storage: Databases require persistent storage to ensure that data is not lost when containers restart. Kubernetes provides persistent volumes that can be used to store database data.

- StatefulSets: Kubernetes StatefulSets are designed to manage stateful applications like databases, providing stable network identities and persistent storage for each instance.

By carefully configuring persistent storage and using StatefulSets, you can effectively run databases on Kubernetes on a VPS, gaining the benefits of simplified management and high availability.
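The stable network identities mentioned above follow a predictable pattern: pods in a StatefulSet are named `<name>-<ordinal>`, and with a headless Service each pod gets a DNS name of the form `<pod>.<service>.<namespace>.svc.<cluster-domain>`. A small sketch follows; the StatefulSet name `db`, the Service name `db-headless`, and the `cluster.local` domain are assumptions for illustration.

```python
def statefulset_pod_dns(
    name: str,
    service: str,
    replicas: int,
    namespace: str = "default",
    cluster_domain: str = "cluster.local",
) -> list[str]:
    """Build the per-pod DNS names a StatefulSet with a headless Service gets."""
    return [
        f"{name}-{ordinal}.{service}.{namespace}.svc.{cluster_domain}"
        for ordinal in range(replicas)
    ]


if __name__ == "__main__":
    # Hypothetical three-replica database StatefulSet.
    for host in statefulset_pod_dns("db", "db-headless", 3):
        print(host)
```

Because the names do not change when a pod is rescheduled, a replica can always find its primary at the same address.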

3.4 Other Potential Use Cases for Kubernetes VPS

Beyond web applications, microservices, and databases, Kubernetes on a VPS can be used for various other use cases:

- CI/CD Pipelines: Kubernetes can be integrated with CI/CD pipelines to automate application build, test, and deployment.

- Batch Processing: Kubernetes can be used to run batch processing jobs, distributing the workload across multiple containers.

- Machine Learning: Kubernetes can deploy and manage machine learning models, providing a scalable and efficient platform for running inference workloads.

- Game Servers: Kubernetes can be used to host game servers, providing dynamic scaling and high availability for online gaming experiences.

These are just a few examples of using Kubernetes on a VPS. The flexibility and control offered by a VPS, combined with the powerful orchestration capabilities of Kubernetes, make it a versatile platform for a wide range of applications.

4. Key Kubernetes Concepts Explained

To get the most out of Kubernetes, it’s helpful to understand its core components. Let’s break down the key players:

4.1 Nodes: The Workers of Your Kubernetes Cluster

Think of your Kubernetes cluster as a team of workers getting a job done. The nodes are those workers—the machines (physical servers or, in our case, your VPS instances) where your applications run. Each node is like a dedicated employee with specific tasks.

Each node has a few essential tools:

- kubelet: This is like the foreman on each worksite (node). It’s an agent that takes instructions from the central manager (the control plane) and ensures everything runs smoothly on its assigned node. It’s in charge of managing the pods (more on those next).

- Container Runtime: This is the engine that powers the actual work. Docker is a popular example; it creates and runs the containers. Other options, like containerd and CRI-O, are also available.

- kube-proxy: This is like the traffic controller, making sure network traffic gets routed to the right place (the correct pods).

So, in a Kubernetes setup on a VPS, each of your VPS instances acts as a node, providing the CPU, RAM, and storage where your applications live and operate.

4.2 Pods: The Smallest Deployable Units in Kubernetes

Now, what are these pods the kubelet is managing? A pod is the smallest unit you can deploy in Kubernetes. Imagine it as a single apartment or a small group of tightly coupled apartments within a building. A pod can house one or more containers that must work closely together.

While it’s possible to have a single container living alone in a pod (like a studio apartment), multiple containers often share a pod when they need to communicate frequently or share data. For example, a web application might have one container running the main web server (like Nginx or Apache) and another container handling logging or monitoring. These containers would live together in a pod, sharing resources like network connections and storage.

Here are some key things to remember about pods:

- They’re temporary: Pods are designed to be ephemeral, meaning they can be created, destroyed, and moved around by Kubernetes as needed. Don’t rely on them having a permanent address.

- They share resources: Containers within a pod share the same network (so they can talk to each other using localhost) and can share storage volumes.

- They have their own address: Each pod gets its own unique IP address within the cluster, allowing other parts of your application to find and communicate with it.

Pods are the essential building blocks of your application within Kubernetes.

4.3 Deployments: Managing Your Application’s Lifecycle

Now, how do you actually manage these pods? That’s where deployments come in. A deployment is like a manager overseeing your application’s desired state. You tell the deployment how many copies of your application (pods) you want running, and it ensures that happens.

Deployments offer some powerful features:

- Declarative Updates: Instead of manually updating each pod, you simply tell the deployment what you want the application to look like (e.g., “I want three replicas of version 2.0 of my app”). Kubernetes takes care of making it happen.

- Rolling Updates: Imagine updating a website without any downtime. Deployments make this possible with rolling updates. They gradually replace old pods with new ones, ensuring that there are always enough running instances to handle incoming traffic.

- Easy Rollbacks: Did you make a mistake with an update? No problem. Deployments make it easy to revert to a previous version of your application with a simple command.

- Automatic Healing: If a pod crashes for any reason, the deployment automatically spins up a new one to replace it, keeping your application running smoothly.

Deployments are the workhorses of Kubernetes, handling the heavy lifting of managing your application’s lifecycle.
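The idea of a desired state can be sketched as a small reconciliation function: compare how many pods should exist with how many do, then create or remove pods to close the gap. This is a conceptual illustration only, not the actual controller logic; the function name and the `web-<n>` pod naming are hypothetical.

```python
def reconcile(
    desired: int, running: list[str], next_id: int
) -> tuple[list[str], int]:
    """Return the pod list after one reconciliation pass and the next free id."""
    pods = list(running)
    # Too few pods: create replacements until the desired count is met.
    while len(pods) < desired:
        pods.append(f"web-{next_id}")
        next_id += 1
    # Too many pods: remove the surplus.
    while len(pods) > desired:
        pods.pop()
    return pods, next_id


if __name__ == "__main__":
    pods, next_id = reconcile(3, [], 0)
    print(pods)
    pods.remove("web-1")  # simulate a crashed pod
    pods, next_id = reconcile(3, pods, next_id)
    print(pods)
```

The same pass handles first deployment, self-healing after a crash, and scaling down, which is why declaring the target count is enough.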

4.4 Services: Exposing Your Applications to the World

So, your pods are running, but how do users access your application? That’s the job of a service. Because pods are temporary and their IP addresses can change, you need a stable way to connect to them. A service provides that stable endpoint.

A service acts like a load balancer or proxy, providing a consistent way to access your application, regardless of which pods are active. There are a few different types of services:

- ClusterIP: This creates an internal IP address within the cluster, which is helpful for communication between different parts of your application. It’s not accessible from outside the cluster.

- NodePort: This exposes your application on a specific port on each node’s IP address. You can then access your application from outside the cluster using any node’s public IP address and that specific port.

- LoadBalancer: This creates an external load balancer (if your provider supports it). This gives you a single public IP address that distributes traffic across your pods. On a VPS, you might need to set up your own load balancer (like Nginx or HAProxy) and then configure Kubernetes to use it.

Services provide a crucial layer of abstraction, ensuring that your application is always accessible, even as pods come and go.
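As a rough mental model, a service is a stable name in front of a changing list of pod addresses. The toy class below uses simple round-robin selection to show the idea. It is not how kube-proxy is implemented (its selection strategy depends on the mode it runs in), and the class name, method names, and IP addresses are invented for the example.

```python
class Service:
    """A stable front door that forwards to whichever pods currently exist."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._endpoints: list[str] = []
        self._next = 0

    def set_endpoints(self, pod_ips: list[str]) -> None:
        """Replace the backing pod list, e.g. after pods are recreated."""
        self._endpoints = list(pod_ips)
        self._next = 0

    def route(self) -> str:
        """Pick the next pod IP in round-robin order."""
        if not self._endpoints:
            raise RuntimeError(f"service {self.name!r} has no ready pods")
        ip = self._endpoints[self._next % len(self._endpoints)]
        self._next += 1
        return ip


if __name__ == "__main__":
    web = Service("web")
    web.set_endpoints(["10.0.0.1", "10.0.0.2"])
    print([web.route() for _ in range(3)])
    # Pods were replaced; callers keep using the same service.
    web.set_endpoints(["10.0.0.9"])
    print(web.route())
```

Callers only ever talk to `web`; the pod addresses behind it can change without them noticing.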

5. Deploying a Simple Application on Kubernetes on VPS

This section will walk you through deploying a simple web application on your Kubernetes cluster running on a VPS. This practical example will solidify your understanding of the concepts we’ve discussed.

5.1 Preparing Your VPS Environment

Before deploying an application to your Kubernetes cluster on a VPS, you must ensure your environment is set up correctly. This involves a few key steps:

- Setting up your VPS: You should have already set up your VPS instances and installed the necessary Kubernetes components (kubeadm, kubelet, kubectl, and a container runtime like Docker or containerd). If you haven’t, refer to our planned article on “Setting Up a Kubernetes Cluster on Your VPS” for detailed instructions.

- Verifying Kubernetes Cluster Health: Use the kubectl get nodes command to verify that all your nodes are Ready. This confirms that your cluster is functioning correctly.

- Installing Docker: While Kubernetes can use other container runtimes, Docker is still widely used and a good starting point. If you haven’t already, install Docker on your VPS nodes. Instructions on how to do this are in our article “Installing Docker on VPS.”

This preparation ensures your VPS environment is ready to receive and run your application.
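If you would rather script the health check than read the table by eye, the output of `kubectl get nodes -o json` can be inspected programmatically. The sketch below assumes the usual shape of that output, where each node carries a list of conditions including one of type `Ready`; the function name and the sample data are illustrative.

```python
import json


def not_ready_nodes(nodes_json: str) -> list[str]:
    """Return the names of nodes whose Ready condition is not "True"."""
    data = json.loads(nodes_json)
    failing = []
    for node in data.get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        ready = any(
            c.get("type") == "Ready" and c.get("status") == "True"
            for c in conditions
        )
        if not ready:
            failing.append(node["metadata"]["name"])
    return failing


if __name__ == "__main__":
    # Hand-written sample in the shape of `kubectl get nodes -o json` output.
    sample = json.dumps({
        "items": [
            {"metadata": {"name": "vps-1"},
             "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
            {"metadata": {"name": "vps-2"},
             "status": {"conditions": [{"type": "Ready", "status": "False"}]}},
        ]
    })
    print(not_ready_nodes(sample))
```

An empty result means every node reported Ready, which is the state you want before deploying.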

5.2 Creating a Simple Docker Image

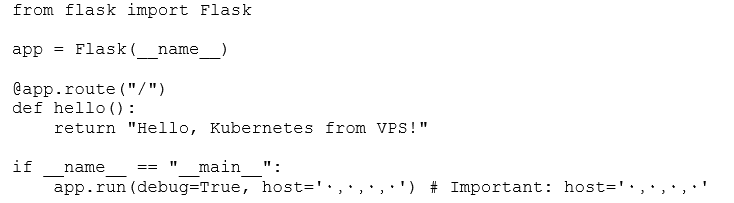

We’ll create a simple web application using Python and Flask for this example. This application will simply display “Hello, Kubernetes from VPS!” in the browser.

1. Create an app.py file:

Python

The host='0.0.0.0' argument is crucial: it tells the Flask application to listen for connections on all network interfaces within the container, which makes it accessible from outside the container.

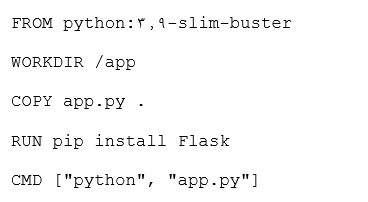

2. Create a Dockerfile:

Dockerfile

This Dockerfile uses a lightweight Python-based image, copies your application code, installs Flask, and sets the command to run the application.

3. Build the Docker image:

Bash

docker build -t my-kubernetes-app .

This command builds the Docker image and tags it as my-kubernetes-app.

For a more in-depth explanation of creating Docker images and Dockerfiles, please refer to our dedicated articles on Docker, such as “What is Docker?” and “Docker Container Components.”

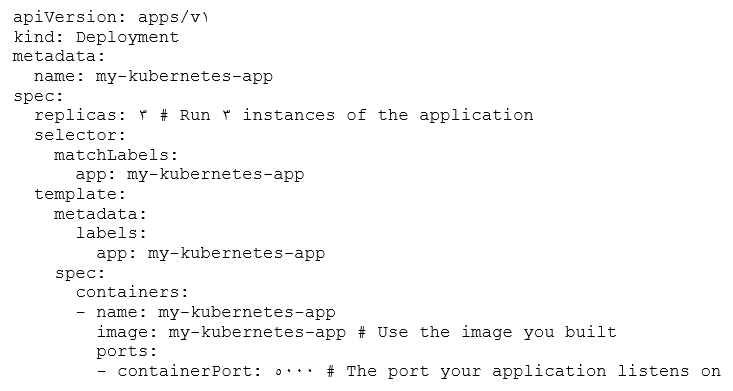

5.3 Creating Kubernetes Deployment and Service YAML Files

Now that we have a Docker image, we must create Kubernetes YAML files to define our deployment and service.

- Create a deployment.yaml file:

YAML

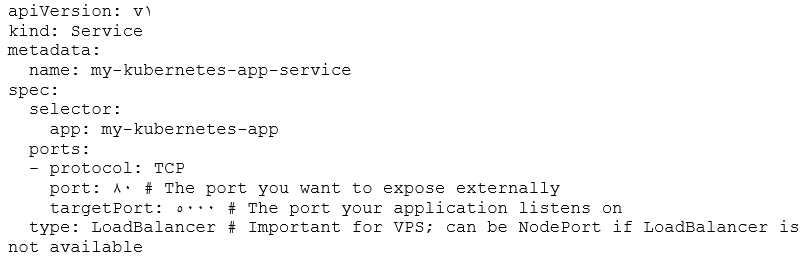

- Create a service.yaml file:

YAML

The type: LoadBalancer is particularly important for VPS setups. If your VPS provider doesn’t offer a native load balancer, you might need to use type: NodePort and set up a separate load balancer (like Nginx) to forward traffic to the NodePort.
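To give a sense of what a Deployment and a Service definition typically contain, the Python sketch below builds both as dictionaries and prints them as a single JSON list, a format `kubectl apply -f` accepts alongside YAML. Treat every value as an assumption for illustration: the resource names, the `app` label, the three replicas, container port 5000 (Flask’s default), service port 80, and the `IfNotPresent` pull policy. It also assumes the `my-kubernetes-app` image is available to your nodes, for example through a registry.

```python
import json

APP = "my-kubernetes-app"  # image tag from the docker build step
PORT = 5000                # assumed container port (Flask's default)


def deployment(replicas: int = 3) -> dict:
    """Deployment that keeps `replicas` copies of the app running."""
    labels = {"app": APP}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": APP},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": APP,
                        "image": APP,
                        "imagePullPolicy": "IfNotPresent",
                        "ports": [{"containerPort": PORT}],
                    }]
                },
            },
        },
    }


def service(service_type: str = "LoadBalancer") -> dict:
    """Service that forwards port 80 to the app's pods."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": APP},
        "spec": {
            "type": service_type,  # use "NodePort" without a provider LB
            "selector": {"app": APP},
            "ports": [{"port": 80, "targetPort": PORT}],
        },
    }


if __name__ == "__main__":
    manifest = {"apiVersion": "v1", "kind": "List",
                "items": [deployment(), service()]}
    print(json.dumps(manifest, indent=2))
```

If you saved this as a script (say, a hypothetical `make_manifests.py`), you could pipe its output into `kubectl apply -f -` instead of maintaining two separate files. Passing `"NodePort"` to `service()` gives the variant discussed above for providers without a native load balancer.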

5.4 Deploying and Accessing Your Application

With the YAML files created, you can now deploy your application:

- Apply the YAML files:

Bash

kubectl apply -f deployment.yaml

#This command tells Kubernetes to create the resources defined in your deployment.yaml file, effectively deploying your application to the cluster.

kubectl apply -f service.yaml

- Check the deployment and service status:

Bash

kubectl get deployments

kubectl get services

- Access your application: If you used type: LoadBalancer, the kubectl get services command will show an external IP address. Open this IP address in your browser to see your application. If you used type: NodePort, the output will show the port opened on every node. Combine your VPS’s public IP address with this port to reach your application.

Congratulations! You’ve successfully deployed a simple application on your Kubernetes cluster running on a VPS. This practical example gives you a basic understanding of how Kubernetes works in a real-world scenario. Remember to consult the official Kubernetes documentation for more advanced configurations and features. Explore our other article about “Docker commands” for a more thorough look at the commands and what each does.

Conclusion

As containerization revolutionizes software deployment, Kubernetes has emerged as the leading orchestration platform. This Hostomize guide has demystified Kubernetes, explaining its core concepts and highlighting the strategic advantages of running it on a VPS. From cost savings and enhanced control to seamless scaling and improved resource utilization, Hostomize VPS offers a compelling platform for harnessing the power of Kubernetes. Whether you’re building microservices, deploying web applications, or exploring new possibilities in container orchestration, Hostomize is here to support your journey. Dive deeper into the world of Kubernetes with Hostomize VPS and unlock the future of application deployment.